Contributed and Invited Abstract Submissions

Contributed Talk Instructions:

Interested presenters are encouraged to submit abstracts that highlight or apply one of the 2024 workshop themes regarding defense and aerospace. Presenting provides an opportunity to network and engage with the defense, aerospace, and academic communities and with industry practitioners. The Co-Chair Committee will review your abstract for acceptance and appropriate placement in the program. Contributed sessions can be one of the following:

- Presentation: A presentation is 20 to 25 minutes in length. Participants will be grouped by the Co-Chair Committee into a Breakout Session.

- Poster Presentation: A print poster or e-poster presentation during the poster session.

- Speed Presentation: A “hybrid” of a presentation and poster; a speed session consists of a short oral presentation of less than 10 minutes and is followed by a poster session later the same day. *Speed Presentations are limited to Students and Fellows*

Invited Talk Instructions:

Invited speakers are nominated by the Co-Chair and Technical Program Committees. A talk should be centered around one of this year's themes. Invited sessions include the following:

- Mini-Tutorial: A mini-tutorial is 90 minutes in length and typically involves case studies and/or applications of analysis and tools under a specific theme.

- Panel: A panel is a 60- to 90-minute session featuring subject matter experts discussing a topic related to one of the workshop themes.

- Presentation: A presentation is 20 to 25 minutes in length. Participants will be grouped by the Co-Chair Committee into a Breakout Session.

- Speed Presentation: A “hybrid” of a presentation and poster; a speed session consists of a short oral presentation of less than 10 minutes and is followed by a poster session later the same day. *Speed Presentations are limited to Students and Fellows*

Guide for all Author Submissions:

To submit your abstract, please complete the form below. Titles should be no longer than 150 characters. Abstracts should be no longer than 500 words. For questions, contact dataworks@testscience.org.

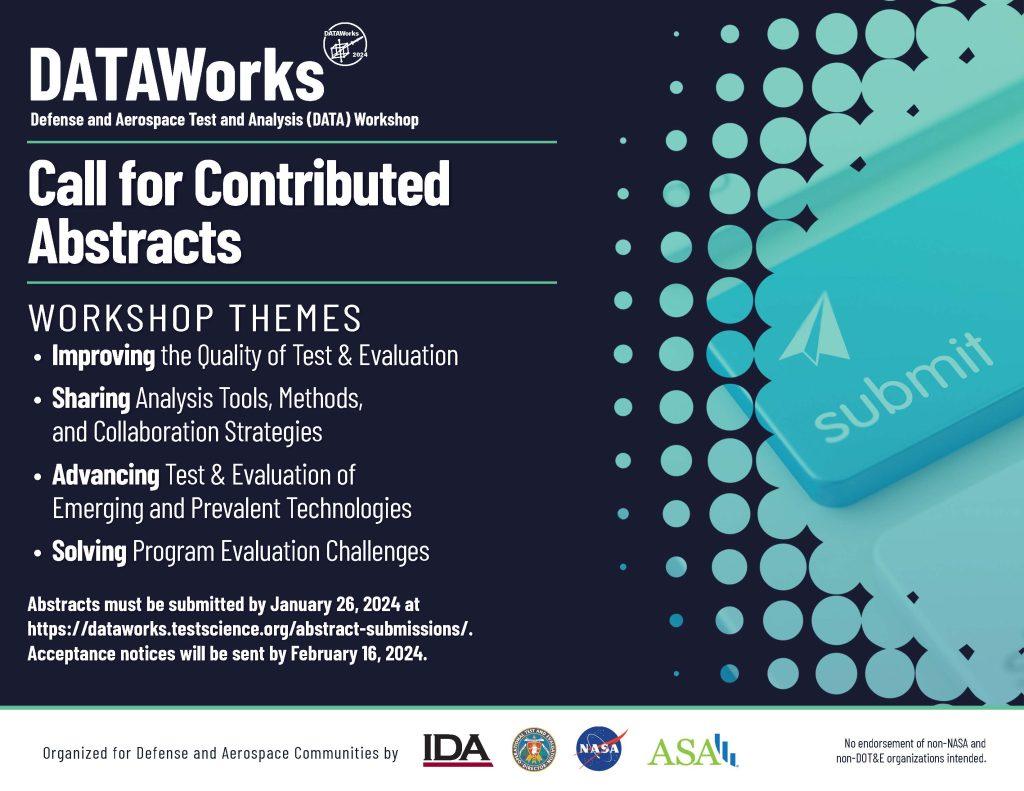

Important Dates:

- Submit an abstract using this form by January 26, 2024.

- Acceptance notifications will be sent by February 16, 2024.

- Register for the workshop by April 2, 2024.

- Submit presentation materials by April 9, 2024.

Disclaimer: PHOTOGRAPHY AND/OR VIDEOTAPING

Upon acceptance, you understand and recognize that you may be photographed, filmed, videotaped, and/or tweeted, and you hereby give DATAWorks the right to take pictures and/or recordings of you. You also grant DATAWorks the right to use your image, recording, name, and affiliated institution, without compensation, for exhibition in any medium.