Location

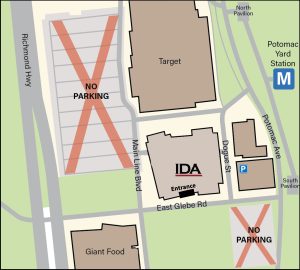

DATAWorks will be held at IDA’s Potomac Yard location.

IDA (Potomac Yard) is located at 730 East Glebe Road, Alexandria, VA, 22305.

- Potomac Yard Metro Station is now open and is a 5-minute walk from the IDA conference center. Public transportation is strongly encouraged.

- Paid street and garage parking are available on Dogue Street at the American Physical Therapy Association (see map). IDA does not validate external parking.

- Do not park in the Potomac Yard Center (Target) lot or on the empty pavement directly across from the IDA building on Seaton Ave; towing is enforced.

- Please note, there will be no IDA shuttle provided this year.

Lunch Options

Visit our Lunch Options page for a list of restaurants and their distance from IDA HQ.

Lodging

Hyatt Centric Old Town Alexandria

1625 King Street

Alexandria, VA 22314

Telephone: (703) 548-1050

Group Name: DATAWorks

Dates: April 20-24, 2026

Rate: $258

Last Day to Book: Monday, March 23, 2026

Residence Inn Alexandria Old Town/Duke Street

1456 Duke Street

Alexandria, VA 22314

Telephone: (703) 548-5474

Group Name: DATAWorks Room Block

Dates: April 20-24, 2026

Rate: Prevailing Government Per Diem Rate

Last Day to Book: Monday, March 23, 2026

Renaissance Arlington Capital View Hotel

2850 South Potomac Ave

Arlington, VA 22202

Telephone: (703) 413-1300

Group Name: DATAWorks Room Block

Dates: April 20-24, 2026

Rate: Prevailing Government Per Diem Rate

Last Day to Book: Monday, March 23, 2026

The Westin Crystal City

1800 Richmond Highway

Arlington, VA 22202

Telephone: (703) 486-1111

Group Name: DATAWorks Room Block

Dates: April 20-24, 2026

Rate: Prevailing Government Per Diem Rate

Last Day to Book: Monday, March 20, 2026