DATAWorks 2023 Agenda Day 1

April 25th Agenda

Audience Legend

1

Everyone. These talks should be accessible to everyone regardless of technical background.

2

Practitioners. These talks might include case studies with some discussion of methods and coding, but are largely accessible to a non-technical audience.

3

Technical Experts. These talks will likely delve into technical details of methods and analytical computations and are primarily aimed at practitioners advancing the state of the art.

7:30 AM – 8:45 AM: Check-in

9:00 AM – 12:00 PM: Parallel Sessions

Room A

Room B

Short Course 2, Part 1

Room C

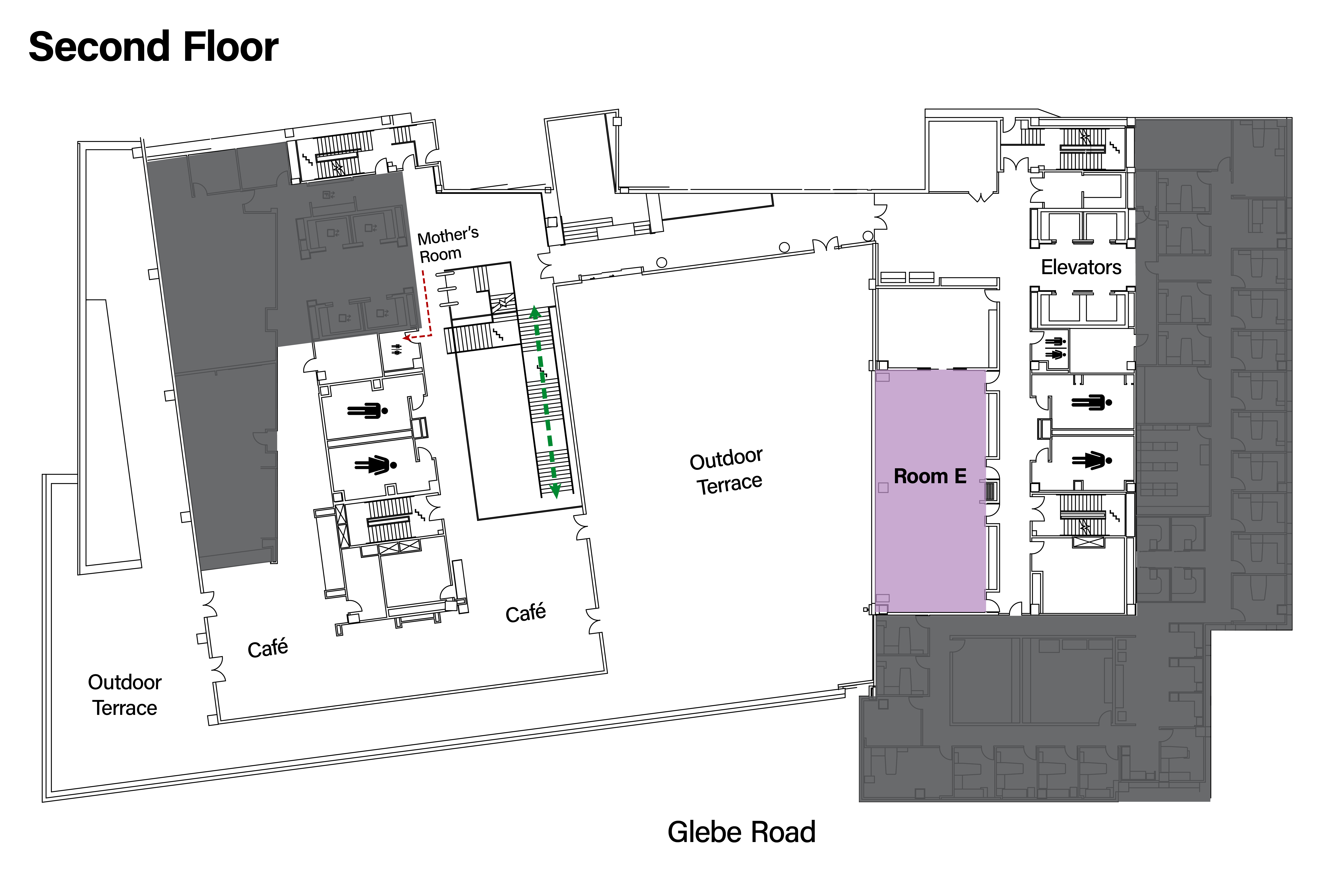

Room E

12:00 PM – 1:00 PM: Lunch

1:00 PM – 4:00 PM: Parallel Sessions

Room A

Room B

Short Course 2, Part 2

Room C

Room E